News | All about the Nvidia RTX 30 reveal

This is all about last night's Nvidia RTX 30 #UltimateCountdown event. After months of speculation, growing hype and endless leaks, Nvidia finally revealed the next series of GeForce video cards in its livestream. This is what we know about the RTX 30 generation.

Anyone who has read our recent Hardware Update articles already had a good picture of what Tuesday evening would bring. The pricing, physical size and global launch window, among other things, leaked early, and most of that has now simply been officially confirmed.

Nvidia’s CEO Jensen Huang revealed the first trio of Ampere cards in the livestream: the RTX 3080 flagship, the mid-range RTX 3070 and the extravagant RTX 3090 powerhouse, a direct continuation of Nvidia’s enthusiast Titan range.

The rumors about availability were therefore not far off. The GeForce RTX 3080 will be available from September 17, followed a week later by the RTX 3090. The RTX 3070 will follow after that, sometime “from October”. Not surprisingly, there is no GeForce RTX 3060 yet. In the previous Turing generation the budget card also took longer to arrive, something that now seems to be standard practice at Nvidia.

Huang’s homey presentation focused mainly on the leap being made from the Turing series. The Ampere cards are presented as a continuation of the first RTX cards, with a better implementation of the techniques introduced two years ago.

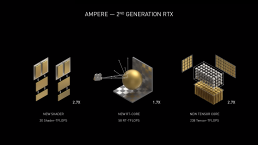

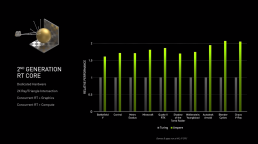

Ampere cards are said to be 1.5 to 2 times as powerful in ray tracing and 4K scenarios, thanks in part to second-generation RT cores, third-generation Tensor cores and growing synergy with AI software such as DLSS 2.0. In other words: more powerful, but also smarter with that power, at least according to Nvidia itself.

Nvidia’s “biggest generational leap to date” means that the RTX 30 series also offers far better value for money than its predecessors. For example, the RTX 3070 has the same target price as the RTX 2070 Super, but is supposed to be just as powerful in games as the RTX 2080 Ti, which cost more than twice as much. In that sense, this generation delivers more bang for your buck, especially when it comes to 4K performance and ray tracing.

Further details confirmed last night are the RTX 3090’s unique cooling design, with a fan on both the top and the bottom and a smaller PCB; the inclusion of Micron’s blazing-fast GDDR6X memory, 24GB on the RTX 3090 and 10GB on the RTX 3080; and the partnership with Samsung for its 8nm manufacturing process.

What Huang spent few words on was the power requirements of the top segment. A unique 12-pin power connector for the GeForce RTX 3090 had leaked earlier, and Nvidia already confirmed it in an announcement last week.

An adapter for that connector appears to be neatly included, and a new power supply is not necessarily required. The RTX 3090 draws 350 Watts, according to Nvidia’s official website, while the RTX 3080 and RTX 3070 draw 320 Watts and 220 Watts respectively. Nvidia recommends “only” a 750 Watt power supply, although 650 Watt would also suffice for the RTX 3070.
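To put those numbers in perspective, here is a small sketch of the arithmetic. It only uses the figures quoted above; reading the 750 Watt recommendation as applying to both the RTX 3090 and the RTX 3080 is our assumption, and the function name `headroom` is purely illustrative.

```python
# Power draw per card in Watts, as quoted from Nvidia's website above.
GPU_DRAW_WATTS = {"RTX 3090": 350, "RTX 3080": 320, "RTX 3070": 220}

# Recommended power supply in Watts. Assumption: the 750 W figure applies
# to both the RTX 3090 and RTX 3080; 650 W is the figure given for the RTX 3070.
RECOMMENDED_PSU_WATTS = {"RTX 3090": 750, "RTX 3080": 750, "RTX 3070": 650}


def headroom(card: str) -> int:
    """Watts left for CPU, drives and fans once the GPU takes its share."""
    return RECOMMENDED_PSU_WATTS[card] - GPU_DRAW_WATTS[card]


if __name__ == "__main__":
    for card, draw in GPU_DRAW_WATTS.items():
        share = draw / RECOMMENDED_PSU_WATTS[card]
        print(f"{card}: {headroom(card)} W left, GPU uses {share:.0%} of the PSU")
```

Even for the RTX 3090, less than half of the recommended power supply goes to the video card itself, which is why an existing 750 Watt unit may well be enough.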

Less important to the quarter of a million avid Twitch viewers, but also worth mentioning, is Nvidia’s focus on optimization around its graphics hardware. Huang made it clear during his presentation that Nvidia wants to keep working to accommodate gamers and other users of its technology.

First of all, there are the content creators, who can use the Nvidia Broadcast software for free starting this month; it should work with all RTX cards. The program uses the smart Tensor cores to, for example, apply efficient noise reduction to your microphone audio or to dynamically polish your webcam image and remove the background, all without additional equipment such as a depth sensor or green screen. These are well-known concepts, but now a bit smarter thanks to your (very expensive) video card.

If you are a fan of in-game storytelling, Nvidia has an even more niche piece of software for you. The Omniverse Machinima App is somewhat like Nvidia’s Ansel implementation for screenshots, but applies that principle to video as well. Using previously researched AI and development techniques, it makes it easier for internet creators to produce unique videos, provided that games support the application.

In that case, in-game characters can be animated using webcam recordings, lips can be synchronized using nothing but audio files, and fancy particle effects can be added to ordinary game footage. Not all of that is equally important to everyone, but it underscores how much craziness is feasible nowadays with your video card. It is not yet known exactly when Omniverse Machinima will roll out or which games will support the application (besides Mount & Blade II: Bannerlord).

For esports athletes, Nvidia is committed to lowering latency and increasing frame rates with a new generation of G-Sync Displays. These new displays feature 360Hz IPS panels, Nvidia’s so-called Reflex Latency Analyzer (a hardware-driven optimization of the rendering pipeline) and the option to plug your mouse directly into the monitor.

It is still unclear exactly how the latter works, but the objective is clear: Nvidia wants to minimize input delay in every possible way. In any case, Acer, Alienware, ASUS and MSI are ready to put such a G-Sync monitor on the market “this fall”.

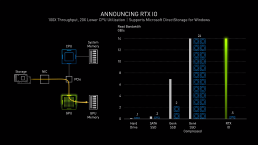

The optimization that will benefit all PC gamers is RTX IO, a new form of decompression for game files. As the demand for 4K textures and in-game detail grows, more and more CPU cores are needed to “unpack” all that game data. Nvidia hopes to relieve that bottleneck with its new storage technique. Think of it as Nvidia’s answer to Sony’s blazing-fast PlayStation 5 promises, with its unique SSD and accompanying compression.

With RTX IO, the files are decompressed not by the central processor but by the GPU itself, so that the data also reaches the video memory faster. This should reduce the CPU load twentyfold, and it builds on Microsoft’s DirectStorage API. A concrete example of how this works, or of which loading times RTX IO can eliminate, was unfortunately not shown last night.